Project 2: Large-Scale Data Analysis using Hadoop MapReduce |

< Previous |

Objectives

The goal of this project is to provide hands-on experience with distributed data processing using Hadoop MapReduce. You get to analyze and extract insights from a real-world, large-scale dataset (at least a gigabytes in size) by designing and implementing efficient MapReduce tasks. This project emphasizes understanding the scalability, and performance characteristics of the Hadoop ecosystem.

Description

In this project, you get to select a large dataset — such as web logs, social media streams, sensor data, or public datasets (e.g., Common Crawl, OpenStreetMap, Enron emails, Kaggle, etc.) — and perform an end-to-end data analysis workflow using Hadoop MapReduce.

You may complete this individually or in a group of at most 3 students in the class.

You get to:

- Clean and preprocess the data to prepare it for analysis (if necessary).

- Identify one or more analytical tasks or questions that can be addressed using MapReduce.

- Implement custom Map and Reduce functions in Java or Python.

- Run your code on a Hadoop cluster (local pseudo-distributed or remote/cloud-based).

- Analyze the results and evaluate the performance of your jobs (e.g., runtime, job counters, bottlenecks).

Possible analysis examples:

- Frequency analysis of words or phrases across a large corpus (e.g., n-gram distributions).

- Temporal or spatial pattern detection (e.g., event correlation over time or geographic location).

- Graph-based analytics (e.g., page rank computation, degree distribution of nodes).

- Anomaly detection in logs or transaction records.

- Sentiment analysis using a lexicon-based approach at scale.

Deliverables

Code Repository

Well-documented code with clear instructions for execution.

Report

Describe your problem selection, any data preprocessing, your system design, implementation, results, and performance evaluation.

Presentation

Create a video presentation about your analysis. Include background information about the problem that you chose. Describe the dataset(s) that you used. Briefly explain the set-up of your Hadoop system. Demonstrate your analysis actually running. Summarize the results produced / key findings. Also include implementation challenges and lessons learned.

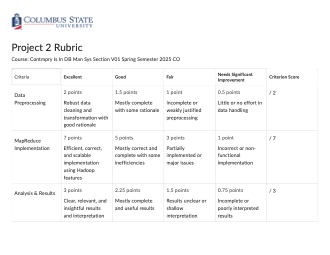

Rubric:

Submissions

CougarVIEW Assignment

Submit the following to the CougarVIEW Assignment:

- Code repository

- Report

- Slides (if any) that accompany your presentation

CougarVIEW Discussion

Submit your video presentation to the CougarVIEW Discussion. Review at least 2 other presentations and comment on something you liked about their approach or analysis that you could use in the future.